In this blog post we will take a look at how we can make product data enrichment easier (and more fun!) by using product images. Product content managers often have huge numbers of new SKUs coming in every day, so how do you decide which ones to enrich? Seasons come and go, and so do product collections. Attributing each and every product is very time-consuming, especially because it is often done manually. Manual attributing is not a particularly fun job and therefore often ends up at the bottom of the pile.

85% of shoppers surveyed say product information and pictures are important to them when deciding which brand or retailer to buy from.

- source

What if you need an extra attribute? Say there's a new trend and you want to inform your customers about it: which of your shoes are chunky boots? Would you like to go through the whole catalog to fill in that one attribute?

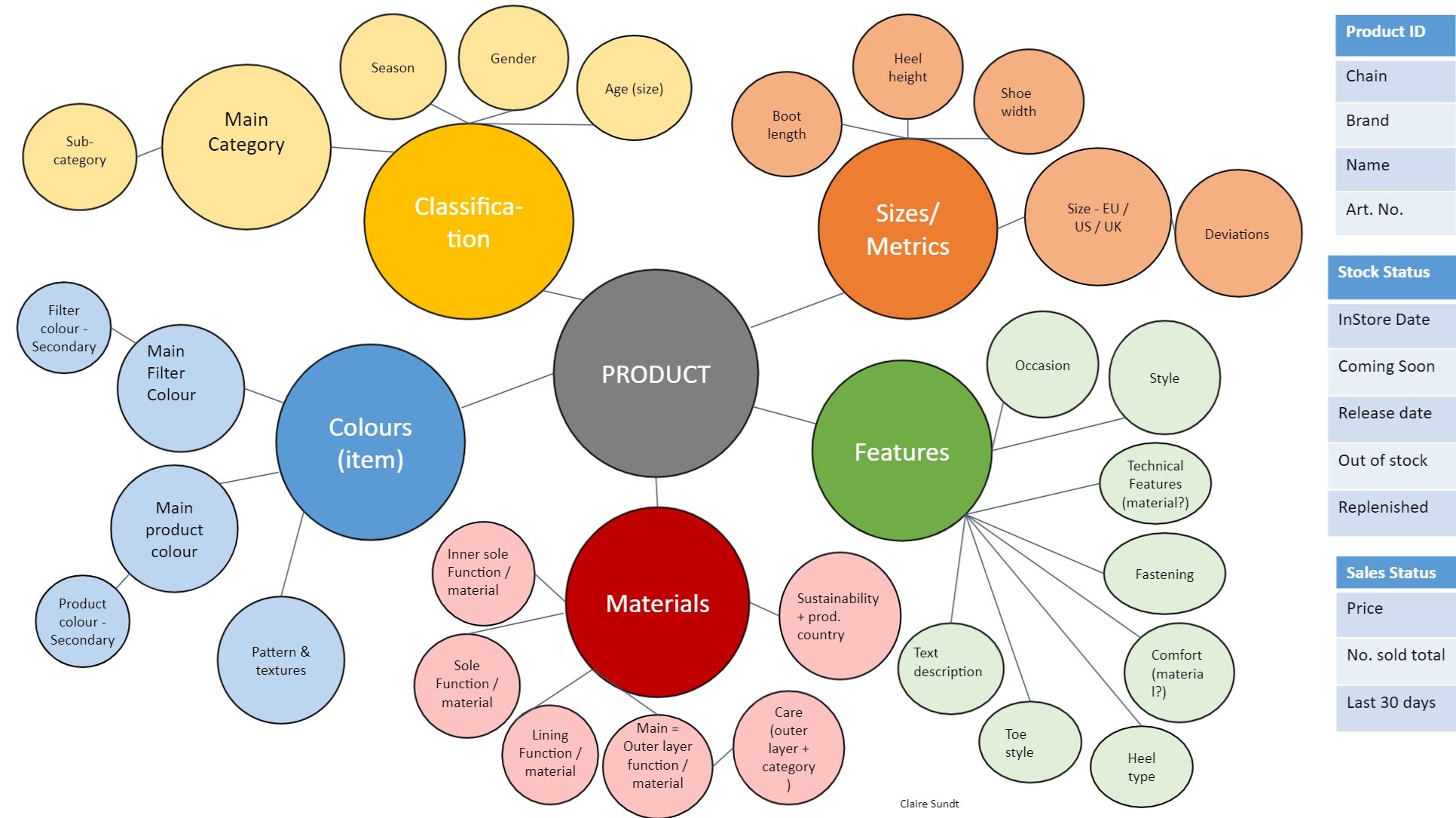

Product attributing is the process of tagging products with information about their attributes, such as color, material, heel height, occasion or type of closure. Enriching product data is often done manually, which is very time-consuming and prone to human error, and makes it hard to change or repeat the work afterwards.

We will take a look at why product attributing is important and how we can automate this time-consuming task. At the end of the article, you can try out our demo yourself.

Why: Product attributing is important

Enriching your product data improves your store's brand image and encourages customers to buy from you. It may even help lower returns, because it is clearer what to expect from the product.

Many products are sold by multiple retailers. If you get your product data directly from the manufacturer, you're probably aware that other stores receive the same information. When many stores sell the same product, enriching product data is the perfect way to set yourself apart from the rest.

Enriched data also helps with analytics: by better understanding the needs of your customers, you can improve your product offering. To summarize, enriching product data can:

- Improve your brand image

- Provide high quality information about your products

- Improve search because customers can filter and find products in a better way

- Recommend similar products that customers might be interested in

- Better understand the needs of your customers and improve your product offering

Automated product attributing is the process of assigning attributes to products automatically. Done well, it is a fast and consistent process that frees up time and resources for more important tasks.

How: Automated product attributing

First we will assess the quality of the data we're working with. Do we have decent product images to extract data from? What kinds of product images are available: product-only, environmental/lifestyle, inspirational, or detail/texture images? Each type displays the product in a different way, which affects the kind of information we can extract.

Product-only images are the most straightforward to work with. They are exclusively meant for displaying the product, and can show the product in all its glory.

Environmental / lifestyle images are often harder to work with than product-only images. They capture the product in a broader context, and often include people, other objects, and backgrounds.

The great thing about lifestyle images is that they tell a story about the product. They are often used to show the product in use, or to show how the product can be used in conjunction with other things.

In terms of extracting data from these images, we can use many of the same techniques as for product-only images. The caveat is that we need additional machine learning steps, such as first locating the product among the people and objects around it, to get good results from these images.

For now we'll stick to product-only images, as it is important that the image contains a high-quality representation of the attribute we want to capture. For instance, if we want to capture the color of the product, we need a picture that shows the color in a clear and visible way. As a general rule of thumb, consistent images with the fewest distractions are best.
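If your catalog mixes image types, a cheap heuristic can help pick out the product-only shots before any classification. The sketch below assumes (this is an assumption, not something the post states) that product-only images are shot on a white or very light background, so their border pixels are mostly near-white. The function names and the `threshold`/`min_ratio` values are hypothetical; the image is a grayscale list of rows, which you could produce with an imaging library such as Pillow.

```python
def border_background_ratio(gray, threshold=235):
    """Fraction of border pixels that are near-white (>= threshold, 0-255 scale).

    `gray` is a grayscale image given as a list of equal-length rows of ints.
    """
    if len(gray) < 2 or len(gray[0]) < 2:
        raise ValueError("image must be at least 2x2 pixels")
    top, bottom = gray[0], gray[-1]
    # Left and right edge pixels of every row between the top and bottom rows.
    sides = [px for row in gray[1:-1] for px in (row[0], row[-1])]
    border = list(top) + list(bottom) + sides
    bright = sum(1 for px in border if px >= threshold)
    return bright / len(border)


def looks_like_product_only(gray, min_ratio=0.9, threshold=235):
    """Heuristic: treat an image as product-only if its border is mostly near-white."""
    return border_background_ratio(gray, threshold) >= min_ratio


# Toy usage with tiny 5x5 "images".
clean = [[255] * 5 for _ in range(5)]
clean[2][2] = 0  # the "product" in the middle
busy = [[50] + [255] * 4 for _ in range(5)]  # dark left edge, e.g. part of a scene
print(looks_like_product_only(clean), looks_like_product_only(busy))  # → True False
```

Images that fail the check are not discarded; they are simply candidates for manual review or for the more involved lifestyle-image pipeline.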

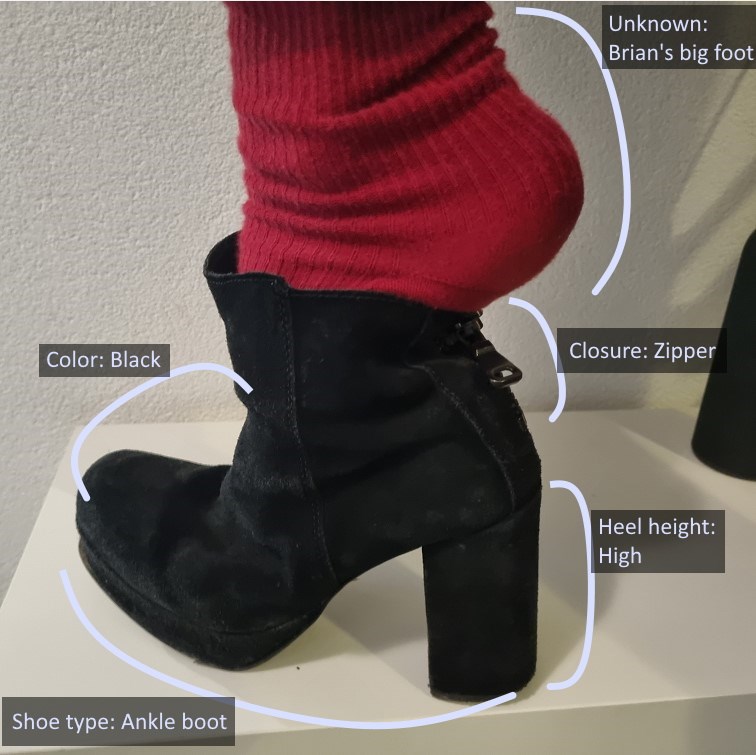

For this post I will try to extract three attributes from the images: color, product type (category), and heel height. Even though these attributes seem fairly clear, their values can still be ambiguous, as we will see further down the post, which makes them a nice challenge.

Image by Claire Sharp Sundt from Geta

Zero-shot classification

We'll start with some exploratory testing, using a large pre-trained model for zero-shot classification. This means we won't train anything ourselves; we just use the pre-trained model as-is. We'll be using CLIP, a multi-modal neural network trained to learn visual concepts from images and their corresponding captions. It was trained on roughly 400 million image-caption pairs collected from the internet, so it should have picked up a lot of general visual knowledge. These images are not focused on fashion or retail at all, so I wonder whether the concepts it learned actually transfer to fashion images.

CLIP is an interesting multi-modal approach: it lets you measure the similarity between text and images. For example, you can find the best caption for a certain image, or the best image for a certain caption.

Photo by hanen souhail on Unsplash

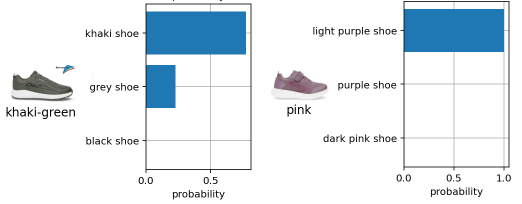

We will use it to rank the best "caption", where the captions are the classification labels for our attribute. We'll start by matching images with colors. For this I've defined a list of available color values:

black, white, red, green, blue, yellow, brown, beige, grey, pink, purple, multi-color
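The ranking step can be sketched as follows. This is a minimal illustration, not the exact setup used for this post: CLIP embeds the image and each caption into the same vector space, the captions are scored by cosine similarity with the image, and a softmax turns the scaled scores into probabilities. The prompt template `"a photo of a {} shoe"` is a hypothetical choice, and the toy 3-dimensional vectors stand in for real embeddings, which you would get from CLIP's image and text encoders (for example `get_image_features` and `get_text_features` on Hugging Face's `CLIPModel`). The default `logit_scale=100.0` mirrors the scale of CLIP's learned temperature.

```python
import math

# Hypothetical prompt template: a short caption around the bare label.
PROMPT_TEMPLATE = "a photo of a {} shoe"


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def softmax(scores):
    """Turn raw scores into probabilities (shifted by the max for numerical stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rank_labels(image_embedding, text_embeddings, labels, logit_scale=100.0):
    """Rank labels by how well their caption embedding matches the image embedding.

    Returns (label, probability) pairs, best match first.
    """
    sims = [cosine_similarity(image_embedding, t) for t in text_embeddings]
    probs = softmax([logit_scale * s for s in sims])
    return sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)


# Toy usage: 3-dimensional vectors stand in for real CLIP embeddings.
labels = ["black", "white", "red"]
prompts = [PROMPT_TEMPLATE.format(label) for label in labels]  # captions you would encode
text_embeddings = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
image_embedding = [0.9, 0.1, 0.2]
ranking = rank_labels(image_embedding, text_embeddings, labels)
print(ranking[0][0])  # → black
```

Because the labels are just text, switching to another attribute (product type, heel height) only means swapping the label list; nothing is retrained.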

Look at that: it classified 10 out of 12 shoes correctly. Only the khaki green and the pink shoe got the wrong label. So what happened there? Looking at the images, it does make sense: the pink is quite dark, which makes it look like a light purple, and khaki is quite a unique color, arguably close to grey. After adding some extra color labels, it labels the khaki shoe as khaki.

This is where we start to see the ambiguity mentioned earlier: color labels and color groups are not clearly defined. The perceived color depends on external factors such as lighting, and you may want to group different colors together.
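One way to handle this ambiguity, sketched here as an assumption rather than the approach from this post, is to let the model choose from fine-grained labels (such as "khaki") and map those onto the coarser color groups your shop filters use. The mapping below is a hypothetical taxonomy; adjust it to your own catalog.

```python
# Hypothetical taxonomy: fine-grained labels (what CLIP is asked to pick from)
# mapped onto the coarser color groups used as shop filters.
COLOR_GROUPS = {
    "black": "black",
    "white": "white",
    "grey": "grey",
    "khaki": "green",
    "olive": "green",
    "green": "green",
    "navy": "blue",
    "blue": "blue",
    "burgundy": "red",
    "red": "red",
    "pink": "pink",
    "light purple": "purple",
    "purple": "purple",
}


def to_color_group(fine_label, groups=COLOR_GROUPS):
    """Map a fine-grained color label to its group, or None if it is unmapped."""
    return groups.get(fine_label.strip().lower())


def regroup_catalog(fine_labels_by_sku, groups=COLOR_GROUPS):
    """Derive color groups for a whole catalog from stored fine-grained labels."""
    return {sku: to_color_group(label, groups) for sku, label in fine_labels_by_sku.items()}


print(regroup_catalog({"SKU-1": "khaki", "SKU-2": "navy"}))
```

Storing the fine-grained label and deriving the group means you can later move khaki to "beige" or split "green" in two by editing the mapping, without classifying a single image again. Unmapped labels come back as `None`, a natural signal for manual review.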

Let's move on to the next feature: the product type. I expect this to be more of a challenge, but CLIP has probably seen many different shoe types, since the shoe type is something you'd expect to find in a caption. We will try to narrow down the product type a lot: not just identifying 'boot' or 'shoe', but using these very specific labels: low ankle boot, chelsea boot, formal shoe, slip-on shoe, slippers, sneaker, loafer / moccasin.

Not bad, I think it labeled 9 out of 12 correctly. For some reason some of the sneakers are identified as a formal shoe or a slip-on shoe. And I expected the formal boot to be a low ankle boot, but my knowledge of shoes is pretty limited :P

Lastly let's move on to the most challenging feature of them all: the heel height. The reason this is the hardest feature (for zero-shot classification) is that I don't expect this information to be found in the image caption very often. Remember that it has been trained on images and their corresponding captions. Therefore CLIP probably will not be able to differentiate between a high heel and a low heel. This becomes clear when we look at the results:

Everything gets labeled as 'low heel': the model has no information to classify such a specific feature of a shoe (I've tried different text labels as well).

At this point we've reached the limit of this zero-shot approach: it's great for 'general' knowledge questions but it won't work when we want to achieve something very specific or out of its domain.

Training or fine-tuning such a big model is possible, however you will need a huge amount of data to do so without overfitting quickly (in the range of 100k+ labeled images).

Training a heel-height classifier

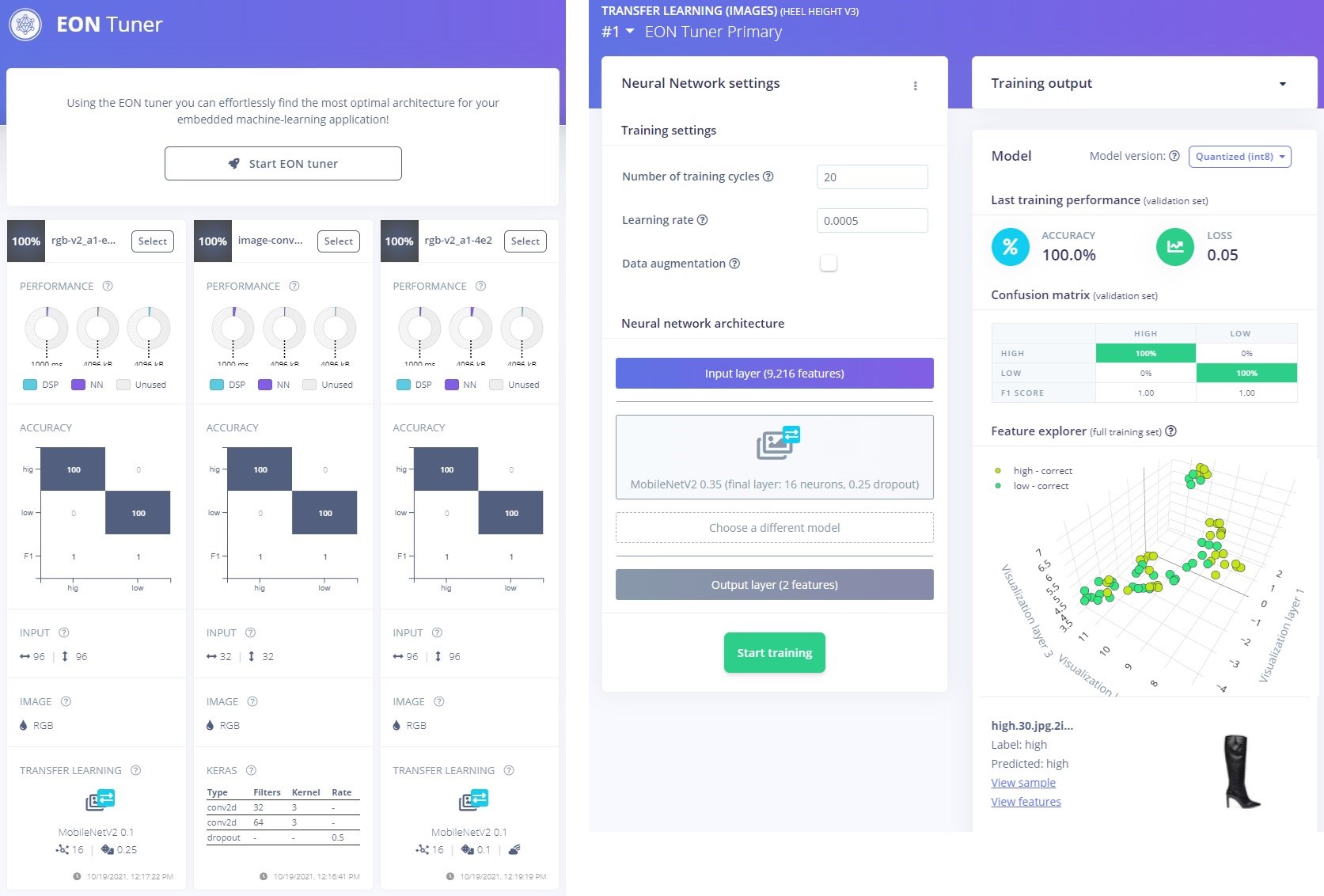

In order to train a classifier, we will do a 180 of sorts. Instead of a huge model like CLIP, which needs a lot of training data, we will use a relatively small model that we can train with little data. I've manually labeled 80 product images of different types of shoes, including the ones shown in the previous examples. To train the model we will use Edge Impulse, an amazing machine learning platform for edge devices. The edge device in our case will be the browser, but Edge Impulse supports a wide range of embedded devices as well. It is easy to use, has great AutoML features, and lets us run the resulting model in the browser using WebAssembly (WASM).

First we prepare our data and split it into training and test data. Then we create an impulse; basically all we have to configure is the image size and the way the image is cropped. We could define our neural network architecture and its parameters manually, but where's the fun in that? (It is fun, but stay with me here.)
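Edge Impulse can split the data for you, so the sketch below only illustrates the idea if you prefer to prepare the split yourself before uploading. With a small dataset it helps to split per label (a stratified split), so each heel height keeps roughly the same share in both sets. The file names, labels and the 50/30 class counts are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(samples, test_fraction=0.2, seed=42):
    """Split (filename, label) pairs into train/test lists per label.

    Each label keeps roughly the same share in both sets; labels with a single
    sample go to the training set only.
    """
    by_label = defaultdict(list)
    for filename, label in samples:
        by_label[label].append((filename, label))

    rng = random.Random(seed)  # fixed seed: the same split on every run
    train, test = [], []
    for label in sorted(by_label):
        group = list(by_label[label])
        rng.shuffle(group)
        n_test = round(len(group) * test_fraction) if len(group) > 1 else 0
        test.extend(group[:n_test])
        train.extend(group[n_test:])
    return train, test


# Toy usage with hypothetical file names and heel-height labels (80 images in total).
samples = (
    [(f"low_{i}.jpg", "low heel") for i in range(50)]
    + [(f"high_{i}.jpg", "high heel") for i in range(30)]
)
train, test = stratified_split(samples)
print(len(train), len(test))  # → 64 16
```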

There's an AutoML feature called the EON Tuner, which tries out various models and searches through their parameter space to find the best one for you. After a couple of minutes, I had a model that reached 100% accuracy on the validation set, the training set and the test set. This is of course an amazing result, but it would not be representative of pictures 'in the wild'. The images I used are very clean product images, which makes classification easier. And apparently I did a nice job labeling the images consistently; either that, or I did a bad job of selecting any 'hard to classify' shoes. In any case, it would be trivial to add more examples and train the model again.
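When you test on new photos, a single accuracy number says little; knowing which heel heights get mixed up tells you which examples to add before retraining. A minimal sketch of that bookkeeping follows; the labels and predictions in the usage part are made up for illustration.

```python
from collections import Counter


def evaluate(y_true, y_pred):
    """Return (accuracy, confusion) where confusion counts (true, predicted) pairs."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    confusion = Counter(zip(y_true, y_pred))
    correct = sum(n for (true, pred), n in confusion.items() if true == pred)
    return correct / len(y_true), confusion


# Toy usage with made-up predictions for photos taken outside the training set.
y_true = ["low heel", "high heel", "high heel", "low heel"]
y_pred = ["low heel", "low heel", "high heel", "low heel"]
accuracy, confusion = evaluate(y_true, y_pred)
print(accuracy)  # → 0.75
for (true, pred), n in sorted(confusion.items()):
    if true != pred:
        print(f"{true!r} predicted as {pred!r}: {n}")
```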

Let's turn it up a notch: I took some pictures of shoes in my house, and to my surprise it identified all of them correctly. Even with a weird foot sticking out ;)

When I tried a motorcycle boot, it failed. I was taking pictures indoors at night and the light wasn't the best. When I tried again with flash, it identified the heel height correctly.

If you DO want the model to support low light images, just take more pictures with low light, label them and retrain the model. All of this can be done easily within Edge Impulse.
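Until the model has seen enough low-light examples, you could flag dark photos before classifying them, either to ask for a retake (as with the flash above) or to collect them for the next labeling round. The sketch below is a hypothetical pre-check, not part of Edge Impulse: it averages the Rec. 601 luma of the RGB pixels, and the threshold of 60 is an arbitrary starting point you would tune on your own photos.

```python
def mean_luminance(pixels):
    """Average Rec. 601 luma (0-255) of an iterable of (r, g, b) tuples."""
    total, count = 0.0, 0
    for r, g, b in pixels:
        total += 0.299 * r + 0.587 * g + 0.114 * b
        count += 1
    if count == 0:
        raise ValueError("image has no pixels")
    return total / count


def is_low_light(pixels, threshold=60.0):
    """Flag images whose average brightness falls below a (hypothetical) threshold."""
    return mean_luminance(pixels) < threshold


# Toy usage: a dim indoor shot versus a well-lit one (4 pixels each).
dim = [(30, 25, 20)] * 4
bright = [(210, 200, 190)] * 4
print(is_low_light(dim), is_low_light(bright))  # → True False
```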

Now that we have our trained heel height model, we can use it to attribute products automatically! Hook it up to your PIM and you're good to go.
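How you hook it up depends entirely on your PIM's API, so the sketch below uses a hypothetical `Product` record and a `classify` callable that returns label probabilities (for example a wrapper around the exported model). The idea it illustrates: only write the attribute when the model is confident, never overwrite values a content manager already filled in, and return the remaining SKUs for manual review. The `min_confidence=0.8` default is an arbitrary assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Product:
    """Hypothetical stand-in for a PIM product record."""
    sku: str
    image_path: str
    attributes: Dict[str, str] = field(default_factory=dict)


def auto_attribute(
    products: List[Product],
    classify: Callable[[str], Dict[str, float]],
    attribute: str = "heel_height",
    min_confidence: float = 0.8,
    overwrite: bool = False,
) -> List[str]:
    """Set `attribute` on products the classifier is confident about.

    Returns the SKUs that still need a human to look at them.
    """
    needs_review = []
    for product in products:
        if attribute in product.attributes and not overwrite:
            continue  # keep values a content manager already filled in
        scores = classify(product.image_path)
        label, confidence = max(scores.items(), key=lambda item: item[1])
        if confidence >= min_confidence:
            product.attributes[attribute] = label
        else:
            needs_review.append(product.sku)
    return needs_review


# Toy usage with a fake classifier returning fixed scores per image.
fake_scores = {
    "a.jpg": {"low heel": 0.95, "high heel": 0.05},
    "b.jpg": {"low heel": 0.55, "high heel": 0.45},
}
products = [Product("SKU-A", "a.jpg"), Product("SKU-B", "b.jpg")]
review = auto_attribute(products, lambda path: fake_scores[path])
print(review)  # → ['SKU-B']
print(products[0].attributes)  # → {'heel_height': 'low heel'}
```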

Can we do more? What about identifying the shoes of customers in your store and offering them similar items on the nearest display? Or classifying an input image to improve visual search on your website? (I feel another blog post coming.) Anyway, if you already have a small dataset with some good samples, try experimenting with machine learning, it's great fun!

Feel free to reach out to [email protected] if you would like to get access to the heel height classification project.

Recap

Most product enrichment is done manually and takes a lot of time and effort. In this post we've shown some examples of how to extract information from product images. Not only can you use this to automate attributing for new products, you can also use it to update or completely change your current attributes (for example to create new color groups or to add and remove attribute values).

Having high-quality product data can improve your brand image, site navigation and search, and it can even help you better understand your customers' needs.

If you're interested in automating your product attributing, reach out to [email protected].
