Understand the user's intent and contextual meaning with semantic search

Natural Language Processing (NLP) has been a hot topic for a while now. There have recently been huge improvements in both natural language understanding (NLU) and natural language generation (NLG). Google reported one of its biggest improvements in search query understanding this year, and the amount of content written by machines has skyrocketed.

"BERT will help Search better understand one in 10 searches in the U.S. in English"

Understanding what someone is trying to say is not easy. Natural language contains a lot of ambiguity, which can be hard for a human to resolve, let alone a machine. In order to ‘process’ a sentence you have to read the whole sentence, and you often need some context to make sense of it all. For example:

"He was almost done writing when Brian realized he forgot to introduce himself."

Somewhere in the middle of the sentence you notice that the first ‘He’ refers to ‘Brian’. A sentence like this is more complex than it looks, but fortunately our brain is trained to understand it. Understanding the actual intent behind a person's search query is a hard problem that has not been solved to date. For example, think about how you come up with your search queries on Google. For a long time, having ‘Professional Googling skills’ meant being able to distill keywords from your search intent, leave out generic words, avoid the need for context and provide enough specificity. There are even courses that teach you how to Google.

Basically, we’ve all been trained to cope with the weaknesses of the existing search algorithms. I think that's quite a fun fact to think about, as we now all have a ‘custom search algorithm’ in our heads. With recent advancements in language understanding, these skills may become less important: search engines will be able to understand complete sentences instead of strategically placed keywords.

Understanding the meaning of a query is a huge part of what a search engine is about. With the recent advancements in NLU, Google said that its new language model, BERT, helps it better understand one in ten searches, going as far as calling it “one of the biggest leaps forward in the history of Search”.

The era of semantic search

Semantic search (or neural search) provides new and exciting ways to bring search functionality to a whole new level. By using neural networks it can:

- Understand intent in natural language questions

- Use an image as search input, or search by image content

- Search across languages

- Search with multiple document types as input (e.g. combined image and text query)

A lot of these functionalities are probably familiar to you already, as they are available on the large search engines. However, there are various open source options available that will allow you to implement neural search functionality yourself.

Search on intent

While writing a search query used to be a skill in its own right, with semantic search this has been simplified a lot. You can now use natural language to write your queries as a question, or search on a concept instead of very specific keywords. The neural search engine will take care of the rest, using NLP models to try and 'understand' what you are looking for and matching your intent with the available documents.

An additional benefit is that there's no need to manually fill in synonyms. The whole idea behind semantic search is that it's able to find texts that have a similar meaning, even when they use different words.

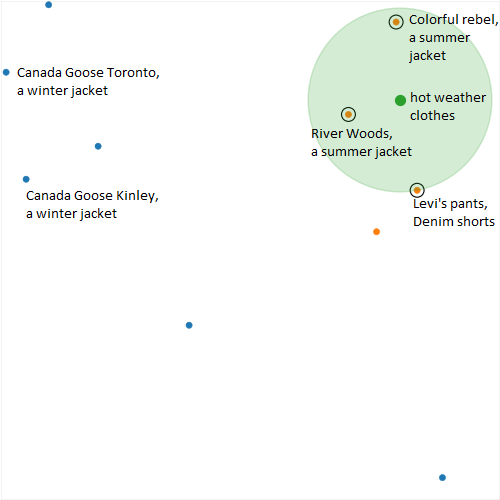

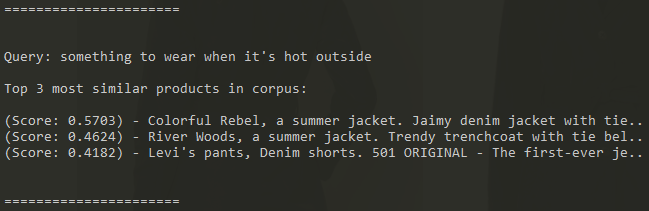

Let's look at some examples using Sentence Transformers. Say you're looking for some clothes to wear this summer and search for: "I would like to have something to wear when it's hot outside".

Given a small dataset of 10 products, the search returns the products that mention summer. This all happens without any complex filtering or search criteria; it is purely based on the words and their meaning.

Another example: "I want some clothes to wear while skiing".

The dataset doesn't include much skiing gear, but the search does return two products made for winter conditions!

Try it out yourself on Colab: https://colab.research.google.com/drive/1v9VWjoNMXb0ah-qMA-j4nvEoiMnvRzyT?usp=sharing

Search for any document type

Another unique functionality is the ability to search with and on any document type. For example, you can use a text search to find key points in a video, an image search to find similar images, or even a combined audio and text search to find a song.

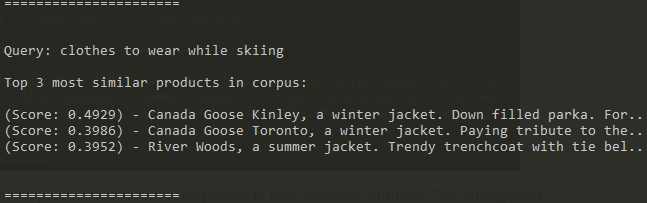

This involves encoding images, text, audio or video into a feature vector in such a way that similar concepts are close to each other in the vector space. These vectors, or embeddings, can be calculated ahead of time and used to build a search index. To search, we calculate the embedding for the search document, whatever its type, and find the indexed documents whose embeddings are closest to it.
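The index-then-search idea can be illustrated with a minimal sketch in plain Python. Everything in it is an assumption made for illustration: the three-dimensional vectors are hand-written stand-ins for the embeddings a real model would produce, the `INDEX` contents are hypothetical, and cosine similarity is just one common way to measure how close two embeddings are.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical pre-computed index: document id -> embedding.
# In a real system a neural encoder would produce these vectors for
# text, images, audio or video; here they are made up by hand.
INDEX = {
    "linen summer dress (text)": [0.9, 0.1, 0.0],
    "beach sandals (image)": [0.7, 0.3, 0.2],
    "insulated ski jacket (text)": [0.1, 0.9, 0.2],
    "wool winter hat (image)": [0.0, 0.8, 0.3],
}


def search(query_embedding, index, top_k=2):
    """Return the ids of the top_k documents most similar to the query."""
    scored = [
        (cosine_similarity(query_embedding, embedding), doc_id)
        for doc_id, embedding in index.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:top_k]]


# Hand-made stand-in for the embedding of a "something for hot weather" query.
query = [0.85, 0.15, 0.05]
print(search(query, INDEX))
```

With these toy vectors the two summer items rank first, regardless of whether the indexed item was originally a text or an image. At larger scale, the linear scan in `search` would typically be replaced by an approximate nearest-neighbour index.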

Jina AI's example for Multimodal Search With TIRG & fashion200k shows this in practice. It allows you to search for products by image and even modify the results by adding a query text.

For example, this allows you to search for a jacket but in a different color or in a different style!

Ready to try?

Deciphering search intent and contextual meaning is an interesting challenge. Search technologies are constantly improving and semantic search is a very promising area, as it offers completely new and exciting ways for you to find the things you are looking for. Advances in NLP improve how well a search engine understands the meaning of your query, which is crucial for improving search accuracy. As these technologies mature, they also become available to the wider public.

Semantic search is one of the biggest advancements in search engines in years. Instead of focusing on keywords, it looks for the meaning of the words used in a query. It can be seen as a step towards a more natural way of searching for information, almost as if you're asking questions to a person instead of a search engine. Technological improvements have made it possible to search through different types of data as well, allowing you to search for and with images, videos and documents.
